- Number 416

- June 23, 2014

Supercomputers help answer the big questions about the universe

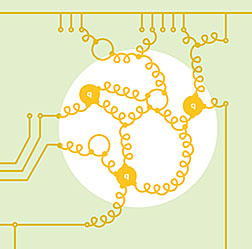

The proton is a complicated blob. It is composed of three particles, called quarks, which are surrounded by a roiling sea of gluons that "glue" the quarks together.

The proton is a complicated blob. It is composed of three particles, called quarks, which are surrounded by a roiling sea of gluons that "glue" the quarks together. In addition to interacting with its surrounding particles, each gluon can also turn itself temporarily into a quark-antiquark pair and then back into a gluon.

This tremendously complicated subatomic dance affects measurements that are crucial to answering important questions about the universe, such as: What is the origin of mass in the universe? Why do the elementary particles we know come in three generations? Why is there so much more matter than antimatter in the universe?

A large group of theoretical physicists at U.S. universities and DOE national laboratories, known as the USQCD collaboration, aims to help experimenters solve the mysteries of the universe by computing how this dance of quarks and gluons affects experimental measurements. The collaboration members use powerful computers to solve the complex equations of quantum chromodynamics, or QCD, the theory that governs the behavior of quarks and gluons.

USQCD's computing needs are met through a combination of INCITE resources at the DOE Leadership Class Facilities at Argonne and Oak Ridge national laboratories; NSF facilities such as NCSA's Blue Waters; a small Blue Gene/Q supercomputer at Brookhaven National Laboratory; and dedicated computer clusters housed at Fermilab and Jefferson Lab. USQCD also exploits floating-point accelerators such as graphics processing units (GPUs) and Intel's Xeon Phi architecture.

With funding from the DOE Office of Science SciDAC program, the USQCD collaboration coordinates and oversees the development of community software that benefits all lattice QCD groups, enabling scientists to make the most efficient use of the latest supercomputer architectures and GPU clusters. Efficiency gains are achieved through new computing algorithms and techniques, such as communication avoidance, data compression and the use of mixed precision to represent numbers.
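To give a feel for what "mixed precision" means in practice, here is a minimal sketch of one common pattern: do the bulk of the arithmetic in cheap single precision, then correct the answer using residuals computed in double precision. This is an illustration only, not USQCD software. The function names (`to_f32`, `jacobi_f32`, `solve_mixed`) are hypothetical, single precision is emulated with Python's standard `struct` module, and the simple Jacobi inner solver stands in for the far more sophisticated solvers that production lattice QCD codes use.

```python
import struct


def to_f32(x):
    """Round a Python float (double precision) to the nearest single-precision value."""
    return struct.unpack("f", struct.pack("f", x))[0]


def matvec(a, x):
    """Matrix-vector product in ordinary double precision."""
    return [sum(aij * xj for aij, xj in zip(row, x)) for row in a]


def jacobi_f32(a, b, sweeps):
    """Approximately solve a x = b, rounding every intermediate to single precision.

    Illustrative stand-in for a low-precision inner solver; assumes the
    matrix is diagonally dominant so that Jacobi sweeps converge.
    """
    n = len(b)
    x = [0.0] * n
    for _ in range(sweeps):
        new_x = []
        for i in range(n):
            s = b[i]
            for j in range(n):
                if j != i:
                    s = to_f32(s - to_f32(a[i][j] * x[j]))
            new_x.append(to_f32(s / a[i][i]))
        x = new_x
    return x


def solve_mixed(a, b, tol=1e-12, max_outer=50, sweeps=10):
    """Mixed-precision iterative refinement (hypothetical helper).

    The outer loop computes the residual in double precision; the inner
    solve for the correction runs in emulated single precision.
    Returns the solution and the number of outer corrections applied.
    """
    a32 = [[to_f32(v) for v in row] for row in a]
    x = [0.0] * len(b)
    for outer in range(max_outer):
        # Residual in double precision: this is what restores full accuracy.
        r = [bi - axi for bi, axi in zip(b, matvec(a, x))]
        if max(abs(v) for v in r) <= tol:
            return x, outer
        # Cheap low-precision solve for the correction to x.
        d = jacobi_f32(a32, [to_f32(v) for v in r], sweeps)
        x = [xi + di for xi, di in zip(x, d)]
    return x, max_outer


if __name__ == "__main__":
    # Small diagonally dominant test system, chosen only for illustration.
    a = [[4.0, 1.0, 0.0], [1.0, 4.0, 1.0], [0.0, 1.0, 4.0]]
    b = [1.0, 2.0, 3.0]
    solution, corrections = solve_mixed(a, b)
    print(solution, corrections)
```

The design point is that the expensive inner work never needs more than single precision, because each outer pass measures the remaining error in double precision and asks the inner solver only for a correction.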

The nature of lattice QCD calculations is very conducive to cooperation among collaborations, even among groups that focus on different scientific applications of QCD effects. Why? The most time-consuming and expensive computing in lattice QCD, the generation of gauge configuration files, is the basis for all lattice QCD calculations. (Gauge configurations represent the sea of gluons and virtual quarks that makes up the QCD vacuum.) They are most efficiently generated on the largest leadership-class supercomputers. The MILC collaboration, a subgroup of the larger USQCD collaboration, is well known for generating state-of-the-art gauge configurations and freely shares them with researchers worldwide.

Specific predictions require more specialized computations and rely on the gauge configurations as input. These calculations are usually performed on dedicated computer hardware at the labs, such as the clusters at Fermilab and Jefferson Lab and the small Blue Gene/Q at Brookhaven, which are funded by the DOE Office of Science’s LQCD-ext Project for hardware infrastructure.

With the powerful human and computer resources of USQCD, particle physicists working on many different experiments, from measurements at the Large Hadron Collider to neutrino experiments at Fermilab, have a chance to get to the bottom of the universe’s most pressing questions.

Jim Simone

Submitted by DOE’s Fermilab